AI-Squared - Artificial Intelligence and Academic Integrity

When ChatGPT was first released, a number of universities around the world elected to ban student use of ChatGPT (as well as other Generative AI tools) in academic work, in response to reports that ChatGPT could generate outputs of unexpected quality and sophistication. Academic integrity concerns, both real and imagined, were raised over how students might use ChatGPT inappropriately in their academic assessments (Shiri, 2023). Most of these concerns rest on the view that Generative AI tools have the potential to do more harm than good and that, when wielded by uninformed students, the technology’s impact ranges from quick ‘work-around’ to weapon (Sawahel, 2023; Weissman, 2023).

To AI or Not to AI?

While some individuals and institutions may view Generative AI with suspicion and as a threat to higher education, others want to weigh the options from more nuanced perspectives. Many universities, for example, have put together task forces to make teaching in the context of AI a priority (Baucom, n.d.; Black, 2023; Fox, 2023). When used ethically and in pedagogically sound ways, AI tools can give academics the chance to reconsider and reimagine their educational focus: not on deliverables and summative end-products (such as written assignments and standard exams) as measures of learning, but on process-driven assessment in which the process itself is evaluated. Stated another way, learning is not only about the product; it is also about the process of acquiring new knowledge and learning ways to think and reason. This gives instructors a window onto what students are ‘doing’ in their classes to develop the requisite disciplinary knowledge and allied critical-thinking abilities. While tools like ChatGPT are prone to fabrication (factually inaccurate outputs) and to reproducing biases present in their training data, they can also help to deepen student engagement and enhance teaching, learning, and assessment (Mollick, 2023). Importantly, these technologies should be recognized as potential tools for increasing the accessibility of learning and supporting a wider diversity of student needs than was previously possible.

Therein lies another important consideration: AI and its various tools are well on their way to becoming omnipresent in our lives. Learning to adapt to AI’s presence in our academic spaces is part of teaching today’s learners. To simply ban or avoid AI is to ignore the reality that U of A students are already engaging and experimenting with AI tools in our courses (yes, right now, as you read this).

Instructors are therefore encouraged to experiment with different approaches and to find ways to adapt and improve teaching and assessment for the new reality of working and studying in a world where these emerging technologies are freely and widely available.

The suggestions in this section align with the initial Guidance proposed by the University of Alberta’s Provost’s Task Force on Artificial Intelligence and the Learning Environment (March 2, 2023).

Given the diversity of learning environments across our campuses, the general guidance that we can give includes the following:

- Have conversations with your students about your expectations regarding the use of Generative AI, particularly in your course assignments. If students are using Generative AI, how would you like them to indicate that to you (e.g., in the sources cited page, methodology section, prefatory comments, or in-text citation)? Summarize these conversations in writing and post the summary in eClass where students can easily find it, so that those who were not in class also have access to it. This also gives students a place to refer back to when completing assignments. Your Department or Faculty may also have specific guidance for you.

- Identify creative uses for Generative AI in your course (e.g., idea generation, code samples, creative application of course concepts, study assistance, language practice). Discuss the limitations of tools like ChatGPT for the topics covered in your course, including training data that ends at a fixed date (2021 for early versions of ChatGPT), factually inaccurate information, biases and discrimination in both the training data and the output, and the use of culturally inappropriate language and sources.

- *Remind students that the Code of Student Behaviour states: “No Student shall represent another’s substantial editorial or compositional assistance on an assignment as the Student’s own work.” Submitting work created by Generative AI without acknowledging it would constitute cheating as defined above.

- Stress to students the value of developing their own voice and writing skills. Motivating students to share their ideas, perspectives, and voice may make Generative AI less appealing. Similarly, asking students to share their reflections (reflective writing) can help reinforce their investment in the learning process. Instructors who are equipped to do so can even demonstrate how Generative AI can be used as a tool to aid student work rather than replace it.

- Remind students that AI tools such as ChatGPT can collect significant personal data from users, which may be shared with third parties.

*Please note that the Code of Student Behaviour has been replaced by a new Student Academic Integrity Policy. For specific details about the misuse of artificial intelligence, see Appendix A (Academic Misconduct) of the Student Academic Integrity Policy:

3. Contract Cheating

Using a service, company, website, or application to

a. complete, in whole or in part, any course element, or any other academic and/or scholarly activity, which the student is required to complete on their own; or

b. commit any other violation of this policy.

This includes misuse, for academic advantage, of sites or tools, including artificial intelligence applications, translation software or sites, and tutorial services, which claim to support student learning.

To help you think through the options available to you, CTL suggests you start your decision-making process with the following questions:

- What are your discipline's conventions and assumptions? How might students use AI to support their academic work in your discipline?

- What role, if any, do AI-driven technologies in the course/classroom play in your personal teaching philosophy?

- Is assessment task redesign needed? How significant is this redesign and development? How do the new assessments fit and align with the course learning outcomes?

- What do you want your students to know about your expectations regarding AI and academic integrity?

- Which University resources would you like to direct your students to for further guidance if necessary?

- What kind of classroom environment would your students like to see? How might you include them in the conversation about AI use in academic work?

Where did you land with your responses to the above questions? Are you leaning towards experimenting with or integrating AI tools in your teaching? Or do you plan not to use AI in your classroom but still allow students to use it for specific purposes in the course of their learning? Whatever your final decision, it is important that you be transparent and share this information with your students.

Let students know that the University of Alberta's most recent Student Academic Integrity Policy (September 2024) explicitly addresses the misuse of Generative AI for academic advantage. Instructors can treat such misuse as cheating under the policy, which includes: "misuse, for academic advantage, of sites or tools, including artificial intelligence applications, translation software or sites, and tutorial services, which claim to support student learning."

If you decide not to permit AI use, keep in mind that not every use of AI tools by students qualifies as cheating; students might use the tools in ways that support and deepen their learning.

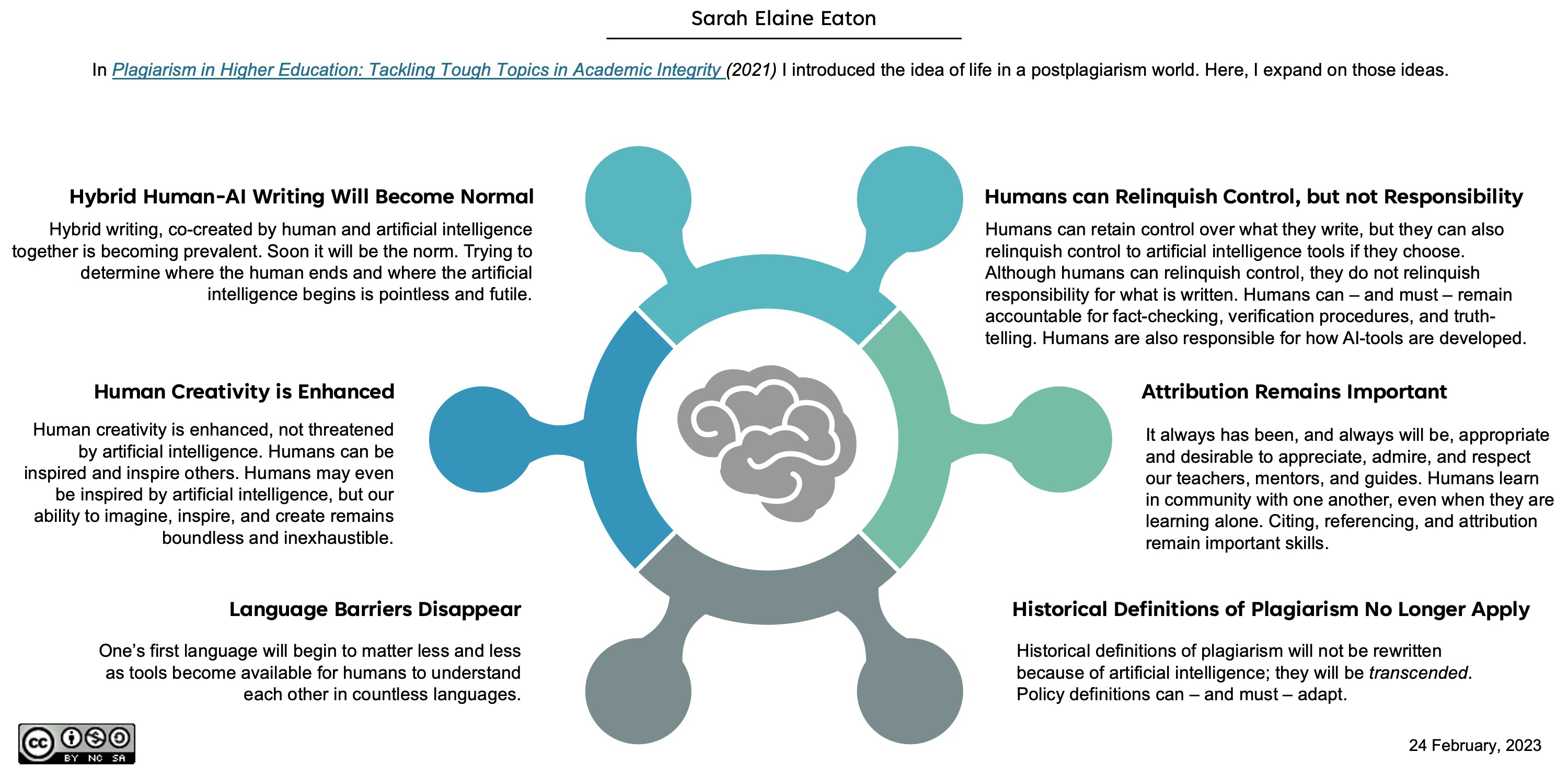

6 Tenets of Postplagiarism: Writing in the Age of Artificial Intelligence

Responding to concerns about AI and writing, plagiarism, and academic integrity, Sarah E. Eaton contemplates a future in which we enter an era marked by “postplagiarism.” This will be a time when archaic print-based notions of copyright are set aside to make way for human-AI partnerships and new definitions of authorship and originality.

Eaton first put forward these ideas in her book, Plagiarism in Higher Education: Tackling Tough Topics in Academic Integrity (2021), and has since revised her thinking in 6 Tenets of Postplagiarism: Writing in the Age of Artificial Intelligence.

Eaton identifies six notions that will likely come to characterize the age of postplagiarism:

- Hybrid Human-AI Writing Will Become Normal

- Human Creativity is Enhanced

- Language Barriers Disappear

- Humans can Relinquish Control, but not Responsibility

- Attribution Remains Important

- Historical Definitions of Plagiarism No Longer Apply

For more information, please also see her article, “Artificial intelligence and academic integrity, post-plagiarism” (2023).

Dialogue with Students

If possible, begin with a conversation, in person or synchronously online, so that you have the best opportunity to discuss your expectations openly and gauge your students’ responses. The purpose of this initial dialogue is to share your expectations with your students and to explore together, in two-way conversation, the possibilities and limitations of using Generative AI tools in the context of your course(s) and their academic work in your discipline. Speak to them about the academic integrity concerns that have been raised at the U of A and elsewhere in higher education. Where appropriate, encourage your students to ask questions, provide input, and offer suggestions. You may be surprised to find that you need to review AI basics with your students; not all students are up to date on AI.

A few key questions to guide your conversation with your students include:

- What do you know about artificial intelligence and AI tools such as GPT-4, Midjourney, and Microsoft’s (GPT-powered) search engine, Bing?

- Have you used any of them before? Why?

- Have you used an AI tool for learning (specifically, in your academic work)?

- If so, how did you use it?

- How do you think you can ethically use AI tools to support your learning?

(Adapted from Eaton, 2023)

This conversation is a great opportunity to discuss the September 2024 Student Academic Integrity Policy and its provisions on academic misconduct with your students, so that together you can consider the ethical implications and responsibilities.

If you plan to integrate AI into your in-person or hybrid courses, here are a couple of options you can use to continue the conversation:

- Create a Discussion forum on AI to share in (and monitor) your students’ experiences and conversations about their use of AI tools.

- Create a Journal activity and ask your students to transparently track and reflect on their use of AI tools as part of their learning process during your class.

Sources

- Baucom, I. (n.d.). New Task Force will Consider Generative AI and Teaching & Learning. Office of the Executive Vice President and Provost. University of Virginia. https://provost.virginia.edu/task-force-generative-ai-and-teaching-learning

- Eaton, S. (2023, February 25). Sarah’s thoughts: 6 Tenets of Postplagiarism: Writing in the Age of Artificial Intelligence, Teaching, and Leadership. https://drsaraheaton.wordpress.com/2023/02/25/6-tenets-of-postplagiarism-writing-in-the-age-of-artificial-intelligence/

- Eaton, S. (2023). Teaching and learning with artificial intelligence apps. University of Calgary. https://taylorinstitute.ucalgary.ca/teaching-with-AI-apps

- Sawahel, W. (2023, February 7). Embrace it or reject it? Academics disagree about ChatGPT. University World News. https://www.universityworldnews.com/post.php?story=20230207160059558

- Shiri, A. (2023, February 2). ChatGPT and Academic Integrity. Information Matters. https://informationmatters.org/2023/02/chatgpt-and-academic-integrity/

- Weissman, J. (2023, February 9). ChatGPT is a plague upon education. Inside Higher Ed. https://www.insidehighered.com/views/2023/02/09/chatgpt-plague-upon-education-opinion