Reinforcement Learning

Harnessing the full potential of AI requires adaptive learning systems. Reinforcement learning (RL) provides this by design: RL agents improve through trial-and-error interaction.

Register today

Sign up on Coursera now to begin working towards your specialization certificate.

About the Specialization

The Reinforcement Learning Specialization consists of 4 courses exploring the power of adaptive learning systems and artificial intelligence (AI).

By the end of this Specialization, learners will understand the foundations of much of modern probabilistic AI and be prepared to take more advanced courses or to apply AI tools and ideas to real-world problems. The content focuses on "small-scale" problems so that learners can build a solid understanding of the foundations of Reinforcement Learning.

The tools learned in this Specialization can be applied to:

- AI in game development

- IoT devices

- Clinical decision making

- Industrial process control

- Financial portfolio balancing

- And more

Learning Outcomes

After completing this Specialization, learners will be able to:

- Build an RL system that makes automated decisions

- Understand how RL relates to, and fits within, the broader umbrella of machine learning, deep learning, and supervised and unsupervised learning

- Understand the space of RL algorithms (Temporal Difference learning, Monte Carlo, Sarsa, Q-learning, Policy Gradient, Dyna, and more)

- Understand how to formalize your task as an RL problem, and how to begin implementing a solution

Specialization Format

Prerequisites:

Experience and comfort programming in Python are required; you must be comfortable converting algorithms and pseudocode into Python. You should also have a basic understanding of concepts from statistics (distributions, sampling, expected values), linear algebra (vectors and matrices), and calculus (computing derivatives).

Course Modules:

The Specialization consists of 4 courses comprising a total of 17 modules (not including welcome modules). Time Commitment: 2-3 hours/week over 4-6 months.

Certificate provided upon completion of Specialization.

Take the specialization & gain insight into the importance of Reinforcement Learning

Instructors

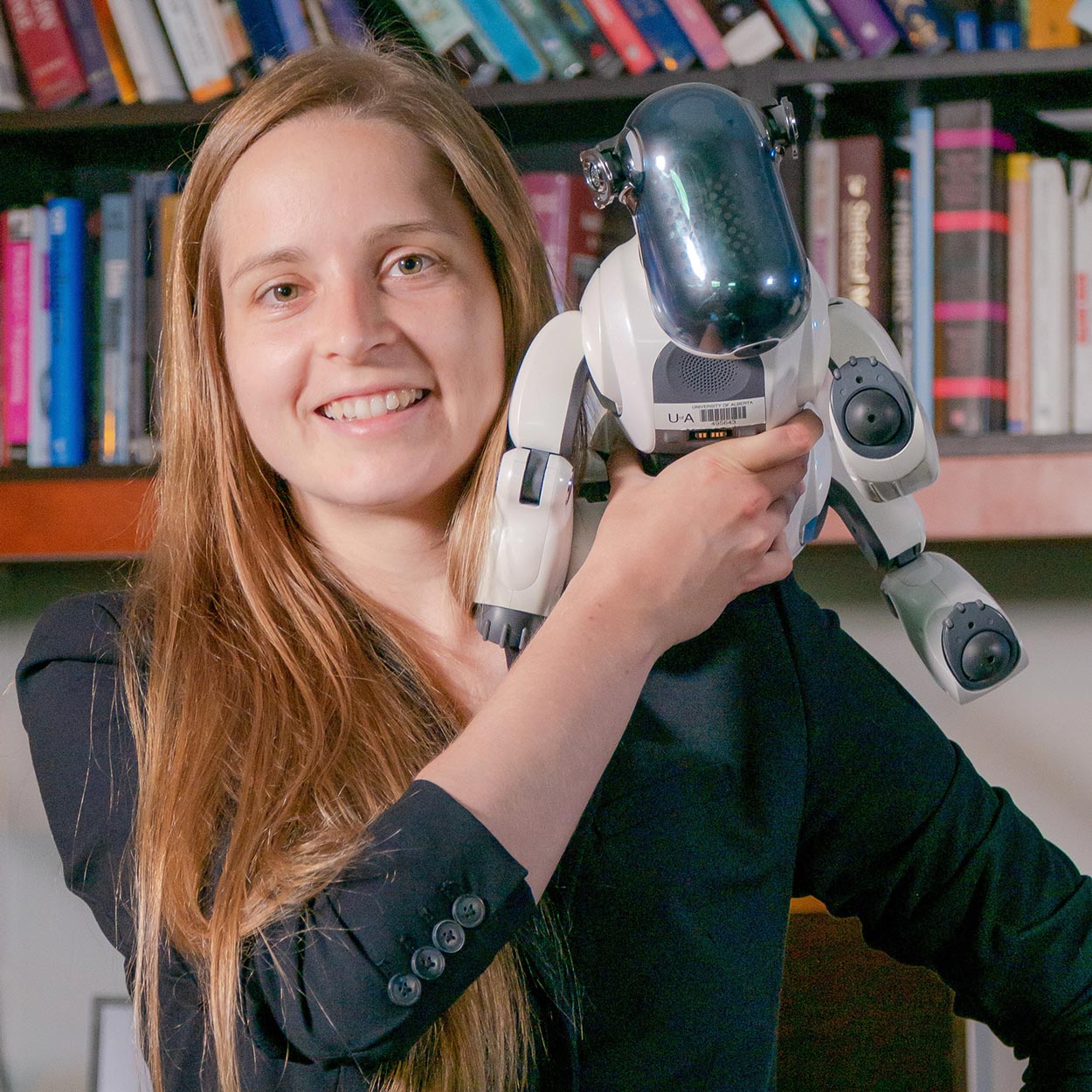

Martha White, Assistant Professor

Department of Computing Science, University of Alberta

Martha White is an Assistant Professor in the Department of Computing Science at the University of Alberta, Faculty of Science. Her research focuses on developing algorithms for agents continually learning from streams of data, with an emphasis on representation learning and reinforcement learning. Martha is a PI of AMII (the Alberta Machine Intelligence Institute) and a director of RLAI (the Reinforcement Learning and Artificial Intelligence Lab) at the University of Alberta. She enjoys soccer, the outdoors, cooking, and especially reading sci-fi.

Adam White, Assistant Professor

Department of Computing Science, University of Alberta

Adam White is an Assistant Professor in the Department of Computing Science at the University of Alberta, Faculty of Science, and a Senior Research Scientist at DeepMind. Adam's research focuses on the problem of Artificial Intelligence, specifically how to replicate or simulate human-level intelligence in physical and simulated agents. His research program explores how the problem of intelligence can be modelled as a reinforcement learning agent interacting with some unknown environment, learning from a scalar reward signal rather than explicit feedback. Adam has taught Reinforcement Learning and Artificial Intelligence at the graduate and undergraduate levels at both the University of Alberta and Indiana University. Outside of teaching and research, Adam spends his time playing Gaelic football and exploring the natural world.

Syllabus

Course 1 - Fundamentals of Reinforcement Learning

This course teaches you the key concepts of Reinforcement Learning that underlie classic and modern RL algorithms. You will be introduced to statistical learning techniques where an agent explicitly takes actions and interacts with the world. Understanding the importance and challenges of learning agents that make decisions is vital today, as more and more companies become interested in interactive agents and intelligent decision-making.

After completing this course, you will be able to start using RL for real problems where you have, or can specify, the Markov Decision Process (MDP).
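When the MDP is fully specified, dynamic programming can compute optimal values without any interaction with the environment. The sketch below is a minimal illustration of value iteration, assuming a made-up two-state MDP stored as a dictionary (`P[state][action]` holds `(probability, next_state, reward)` triples). The states, actions, rewards, and discount `gamma = 0.9` are hypothetical choices for illustration, not course material.

```python
from typing import Dict, List, Tuple

# P[state][action] -> list of (probability, next_state, reward) triples.
# This two-state MDP is hypothetical and only for illustration.
Transition = Tuple[float, int, float]
MDP = Dict[int, Dict[str, List[Transition]]]

P: MDP = {
    0: {"stay": [(1.0, 0, 0.0)], "move": [(1.0, 1, 1.0)]},
    1: {"stay": [(1.0, 1, 2.0)], "move": [(1.0, 0, 0.0)]},
}


def q_value(P: MDP, V: Dict[int, float], s: int, a: str, gamma: float) -> float:
    """Expected reward plus discounted next-state value for action a in state s."""
    return sum(p * (r + gamma * V[s2]) for p, s2, r in P[s][a])


def value_iteration(P: MDP, gamma: float = 0.9, theta: float = 1e-8):
    """Repeat Bellman optimality backups until the largest change is below theta."""
    V = {s: 0.0 for s in P}
    while True:
        delta = 0.0
        for s in P:
            best = max(q_value(P, V, s, a, gamma) for a in P[s])
            delta = max(delta, abs(best - V[s]))
            V[s] = best  # in-place update
        if delta < theta:
            break
    # Extract the greedy policy with respect to the converged values.
    policy = {s: max(P[s], key=lambda a: q_value(P, V, s, a, gamma)) for s in P}
    return V, policy


V, policy = value_iteration(P)
print({s: round(v, 3) for s, v in V.items()}, policy)
# → {0: 19.0, 1: 20.0} {0: 'move', 1: 'stay'}
```

In this toy MDP, staying in state 1 earns 2 per step forever, so its optimal value is 2 / (1 - 0.9) = 20, and moving from state 0 is worth 1 + 0.9 × 20 = 19, which matches the printed values.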

Course Modules

- Module 0: Welcome to the Course

- Module 1: The K-Armed Bandit Problem

- Module 2: Markov Decision Processes

- Module 3: Value Functions & Bellman Equations

- Module 4: Dynamic Programming
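The k-armed bandit problem in Module 1 is the simplest setting in which an agent must balance exploration and exploitation. Below is a minimal epsilon-greedy sketch with incremental sample-average estimates. It assumes three hypothetical arms with Gaussian rewards of unit variance; `true_means`, `epsilon`, and `steps` are illustrative values, not taken from the course.

```python
import random


def run_bandit(true_means, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent with incremental sample-average value estimates.

    true_means are hypothetical arm means; each reward is drawn from a
    Gaussian with that mean and unit variance.
    """
    rng = random.Random(seed)
    k = len(true_means)
    Q = [0.0] * k  # estimated value of each arm
    N = [0] * k    # number of times each arm was pulled
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            a = rng.randrange(k)                   # explore: random arm
        else:
            a = max(range(k), key=lambda i: Q[i])  # exploit: ties go to lowest index
        r = rng.gauss(true_means[a], 1.0)
        N[a] += 1
        Q[a] += (r - Q[a]) / N[a]                  # Q_{n+1} = Q_n + (R_n - Q_n) / n
        total += r
    return Q, N, total / steps


Q, N, avg_reward = run_bandit([0.2, 0.8, 1.5])
print("pulls per arm:", N, "average reward:", round(avg_reward, 2))
```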

Course 2 - Sample Based Learning Methods

In this course, you will learn about several algorithms that can learn near-optimal policies based on trial-and-error interaction with the environment, that is, by learning from the agent's own experience. Learning from actual experience is striking because it requires no prior knowledge of the environment's dynamics, yet can still attain optimal behavior. We will cover intuitively simple but powerful Monte Carlo methods, and temporal difference learning methods including Q-learning. We will wrap up this course by investigating how we can get the best of both worlds: algorithms that combine model-based planning (similar to dynamic programming) and temporal difference updates to radically accelerate learning.
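To make "learning from the agent's own experience" concrete, here is a minimal sketch of tabular TD(0) prediction. It assumes the classic five-state random walk used as an example in Sutton and Barto's Reinforcement Learning: An Introduction (uniform random policy, reward +1 only on exiting to the right, no discounting); the step size, episode count, and seed are illustrative. The agent never sees the transition probabilities, only sampled transitions.

```python
import random


def td0_random_walk(episodes=2000, alpha=0.02, seed=0):
    """Tabular TD(0) prediction on a 5-state random walk.

    Non-terminal states are 1..5 and terminals are 0 and 6. The policy moves
    left or right with equal probability, the only reward is +1 for exiting
    on the right, and gamma = 1, so the true values are 1/6, 2/6, ..., 5/6.
    """
    rng = random.Random(seed)
    V = [0.0] + [0.5] * 5 + [0.0]  # terminal values stay fixed at 0
    for _ in range(episodes):
        s = 3  # every episode starts in the middle state
        while s not in (0, 6):
            s2 = s + rng.choice((-1, 1))
            r = 1.0 if s2 == 6 else 0.0
            # TD(0): move V(s) toward the bootstrapped target r + V(s')
            V[s] += alpha * (r + V[s2] - V[s])
            s = s2
    return V[1:6]


estimates = td0_random_walk()
true_values = [i / 6 for i in range(1, 6)]
rmse = (sum((v - t) ** 2 for v, t in zip(estimates, true_values)) / 5) ** 0.5
print([round(v, 2) for v in estimates], f"RMS error: {rmse:.3f}")
```

A Monte Carlo version would instead wait until the episode ends and move each visited state's value toward the observed return; TD(0) updates after every step by bootstrapping from the current estimate of the next state.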

Course Modules

- Module 0: Introduction to the course

- Module 1: Monte Carlo Methods for Prediction & Control

- Module 2: Temporal Difference Learning Methods for Prediction

- Module 3: Temporal Difference Learning Methods for Control

- Module 4: Planning, Learning, & Acting
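Module 4 combines learning and planning. Below is a minimal tabular Dyna-Q sketch, assuming a hypothetical six-state corridor (start at state 0, reward +1 on reaching state 5) and illustrative hyperparameters. Each real step performs a Q-learning update, stores the transition in a learned deterministic model, and then replays `n_planning` simulated transitions sampled from that model.

```python
import random


def dyna_q(episodes=30, n_planning=10, alpha=0.1, gamma=0.95, epsilon=0.1, seed=0):
    """Tabular Dyna-Q on a hypothetical corridor: states 0..5, goal = 5 (reward +1)."""
    rng = random.Random(seed)
    n_states, goal = 6, 5
    Q = [[0.0, 0.0] for _ in range(n_states)]  # actions: 0 = left, 1 = right
    model = {}                                 # (s, a) -> (r, s2, done)

    def env_step(s, a):
        """Deterministic corridor dynamics; walls at both ends."""
        s2 = min(max(s + (1 if a == 1 else -1), 0), goal)
        return (1.0 if s2 == goal else 0.0), s2, s2 == goal

    def update(s, a, r, s2, done):
        """One Q-learning update toward r + gamma * max_a' Q(s2, a')."""
        target = r if done else r + gamma * max(Q[s2])
        Q[s][a] += alpha * (target - Q[s][a])

    steps_per_episode = []
    for _ in range(episodes):
        s, done, steps = 0, False, 0
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                best = max(Q[s])  # greedy with random tie-breaking
                a = rng.choice([i for i in (0, 1) if Q[s][i] == best])
            r, s2, done = env_step(s, a)
            update(s, a, r, s2, done)       # direct RL from real experience
            model[(s, a)] = (r, s2, done)   # model learning
            for _ in range(n_planning):     # planning from simulated experience
                ps, pa = rng.choice(list(model))
                update(ps, pa, *model[(ps, pa)])
            s, steps = s2, steps + 1
        steps_per_episode.append(steps)
    return Q, steps_per_episode


Q, steps = dyna_q()
print("first episodes:", steps[:3], "last episodes:", steps[-3:])
```

Setting `n_planning=0` reduces this to plain Q-learning, which gives a simple way to compare how much planning speeds up learning on the same task.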

Course 3 - Prediction and Control with Function Approximation

In this course, you will learn how to solve problems with large, high-dimensional, and potentially infinite state spaces. You will see that estimating value functions can be cast as a supervised learning problem (function approximation), allowing you to build agents that carefully balance generalization and discrimination in order to maximize reward. We will begin this journey by investigating how policy evaluation (prediction) methods like Monte Carlo and TD can be extended to the function approximation setting. You will learn about feature construction techniques for RL, and representation learning via neural networks and backprop. We conclude this course with a deep dive into policy gradient methods, a way to learn policies directly rather than deriving them from a learned value function. In this course, you will solve two continuous-state control tasks and investigate the benefits of policy gradient methods in a continuous-action environment. This course builds strongly on the fundamentals of Courses 1 and 2.
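Casting value estimation as supervised learning can be illustrated with gradient Monte Carlo prediction, where each observed return serves as a regression target. Below is a minimal sketch, assuming a hypothetical 20-state random walk and state aggregation into four groups (one-hot group features); the step size, episode count, and seed are illustrative.

```python
import random

N = 20       # hypothetical random walk: states 1..N, terminals 0 and N + 1
GROUPS = 4   # state aggregation: 4 groups of N // GROUPS neighbouring states


def group(s):
    """Index of the aggregation group containing state s (1 <= s <= N)."""
    return (s - 1) * GROUPS // N


def gradient_mc(episodes=3000, alpha=0.0005, seed=0):
    """Gradient Monte Carlo prediction with state aggregation (gamma = 1).

    With one-hot group features, v_hat(s, w) = w[group(s)] and its gradient
    is the indicator of that group, so each update touches a single weight.
    """
    rng = random.Random(seed)
    w = [0.0] * GROUPS
    for _ in range(episodes):
        s, visited = (N + 1) // 2, []
        while 1 <= s <= N:
            visited.append(s)
            s += rng.choice((-1, 1))
        G = 1.0 if s == N + 1 else 0.0  # only reward: +1 for exiting right
        for v in visited:               # with gamma = 1, every visit sees return G
            g = group(v)
            w[g] += alpha * (G - w[g])  # SGD step on (G - v_hat)^2 / 2
    return w


w = gradient_mc()
true_v = {s: s / (N + 1) for s in range(1, N + 1)}
rmse = (sum((w[group(s)] - v) ** 2 for s, v in true_v.items()) / N) ** 0.5
print([round(x, 2) for x in w], f"RMS error: {rmse:.3f}")
```

Because aggregation assigns a single value to each group, some approximation error remains even after many episodes; that residual error is what better feature construction aims to reduce.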

Course Modules

- Module 0: Introduction to the course

- Module 1: On-policy Prediction with Approximation

- Module 2: Constructing Features for Prediction

- Module 3: Control with Approximation

- Module 4: Policy Gradient
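Policy gradient methods adjust the parameters of the policy itself. Below is a minimal sketch of REINFORCE with a running-average reward baseline on one-step episodes, using a three-armed bandit so the update is easy to check. It assumes a softmax policy over action preferences `theta`; the arms, step size, and episode count are hypothetical. The continuous-action setting mentioned in the course description would need a different policy parameterization (for example, a Gaussian) and is not shown here.

```python
import math
import random


def softmax(prefs):
    """Numerically stable softmax over a list of action preferences."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]


def reinforce_bandit(true_means, episodes=3000, alpha=0.05, seed=0):
    """REINFORCE with a running-average baseline on one-step episodes.

    theta holds one preference per action; for a softmax policy,
    d log pi(a) / d theta[b] = (1 if b == a else 0) - pi[b].
    """
    rng = random.Random(seed)
    k = len(true_means)
    theta = [0.0] * k
    baseline, n = 0.0, 0
    for _ in range(episodes):
        pi = softmax(theta)
        a = rng.choices(range(k), weights=pi)[0]
        G = rng.gauss(true_means[a], 1.0)  # return of a one-step episode
        advantage = G - baseline
        for b in range(k):
            grad_log_pi = (1.0 if b == a else 0.0) - pi[b]
            theta[b] += alpha * advantage * grad_log_pi
        # Update the baseline after the policy step so it excludes the current return.
        n += 1
        baseline += (G - baseline) / n
    return softmax(theta)


pi = reinforce_bandit([0.2, 0.8, 1.5])
print("final action probabilities:", [round(p, 3) for p in pi])
```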

Course 4 - A Complete Reinforcement Learning System (Capstone)

In this final course, you will put together your knowledge from Courses 1, 2, and 3 to implement a complete RL solution to a problem. This capstone will let you see how each component (problem formulation, algorithm selection, parameter selection, and representation design) fits together into a complete solution, and how to make appropriate choices when deploying RL in the real world. This project will require you to implement both the environment to simulate your problem and a control agent with neural network function approximation. In addition, you will conduct a scientific study of your learning system to develop your ability to assess the robustness of RL agents. To use RL in the real world, it is critical to (a) appropriately formalize the problem as an MDP, (b) select appropriate algorithms, (c) identify which choices in your implementation will have large impacts on performance, and (d) validate the expected behaviour of your algorithms. This capstone is valuable for anyone who is planning on using RL to solve real problems.

Course Modules

- Module 0: Welcome to the Capstone

- Module 1: Milestone 1: Formalize Word Problem as MDP

- Module 2: Milestone 2: Choosing the Right Algorithm

- Module 3: Milestone 3: Identify Key Performance Parameters

- Module 4: Milestone 4: Implement Your Agent

- Module 5: Submit Your Parameter Study and Course Wrap Up
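A parameter study typically sweeps a hyperparameter, repeats each setting over many independent runs, and reports the mean together with a measure of uncertainty. Below is a minimal sketch, assuming a hypothetical constant-step-size epsilon-greedy bandit agent (`run_once`) as a stand-in for the capstone agent, 30 seeds per setting, and the standard error of the mean as the uncertainty measure.

```python
import random
import statistics


def run_once(alpha, seed, steps=500, epsilon=0.1, means=(0.2, 0.8, 1.5)):
    """One run of a hypothetical constant-step-size epsilon-greedy bandit agent.

    Returns the average reward over the run.
    """
    rng = random.Random(seed)
    Q = [0.0] * len(means)
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            a = rng.randrange(len(means))
        else:
            a = max(range(len(means)), key=lambda i: Q[i])
        r = rng.gauss(means[a], 1.0)
        Q[a] += alpha * (r - Q[a])  # constant step size
        total += r
    return total / steps


def parameter_study(alphas, n_runs=30):
    """Mean performance and its standard error for each step size."""
    results = {}
    for alpha in alphas:
        scores = [run_once(alpha, seed) for seed in range(n_runs)]
        mean = statistics.mean(scores)
        stderr = statistics.stdev(scores) / n_runs ** 0.5
        results[alpha] = (mean, stderr)
    return results


results = parameter_study([0.01, 0.1, 0.5])
for alpha, (mean, se) in results.items():
    print(f"alpha={alpha:<5} mean reward={mean:.3f} +/- {se:.3f}")
```

Reusing the same seeds for every step size (common random numbers) makes the comparison between settings less noisy. Plotting mean performance against the step size on a log scale is a common way to present such a sweep.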